Testing the Foundation of Special Relativity

The Düsseldorf team in the laboratory. From left to right: Ch. Eisele, A. Yu. Nevsky and S. Schiller.

[This is an invited article based on recent work of the author and his collaborators -- 2Physics.com]

Author: Ch. Eisele

Affiliation: Institute for Experimental Physics, Heinrich-Heine-Universität Düsseldorf, Germany

Contact: christian.eisele@uni-duesseldorf.de

Since Albert Einstein developed the theory of special relativity (TSR) during his annus mirabilis in 1905 [1], we have known that all physical laws should be formulated so that they are invariant under a special class of transformations, the so-called Lorentz transformations. This "Lorentz Invariance" (LI) follows from two postulates, which are general but experimentally testable statements.

The first postulate is Einstein's principle of relativity. It states that the laws describing the change of physical states do not depend on the choice of the inertial coordinate system used in the description. In this sense, all inertial coordinate systems (i.e. systems moving with constant velocity relative to each other) are equivalent.

The second postulate states the universality of the speed of light: in every inertial system light propagates with the same velocity, and this velocity does not depend on the state of motion of the source or on the state of motion of the observer. We may say that the observer’s clocks and rulers behave in such a way that (s)he cannot determine any change in the value of the velocity.

Fig. 1: General Relativity, as well as the Standard Model, assumes the validity of (local) Lorentz Invariance. Both theories might be only low-energy limits of a more fundamental theory unifying all fundamental forces and exhibiting tiny violations of Lorentz Invariance.

Today, the laws of Special Relativity lie, as either a local or a global symmetry, at the very basis of our accepted theories of the fundamental forces (see fig. 1): the theory of General Relativity and the Standard Model of the electroweak and strong interactions. Physicists are trying hard to develop a single theory, a Grand Unified Theory (GUT), that describes all the fundamental interactions in a common way, i.e. including a quantum description of gravitation. Over the last few decades, candidate theories for a unification of the fundamental forces have been developed, e.g. string theories and loop quantum gravity. These do not rule out the possibility of Lorentz Invariance violations, so Lorentz Invariance may be only an approximate symmetry of nature. These theoretical developments stimulate experimentalists to test the basic principles on which the theory of Special Relativity is built with the highest precision that technology allows.

To test Lorentz Invariance (LI) one needs a so-called test theory, i.e. a theory that can be used to interpret experimental results with respect to the validity of LI. Two test theories are commonly used: the Robertson-Mansouri-Sexl (RMS) theory [2-5], a kinematical framework dealing with generalized transformation rules, and the Standard Model Extension (SME) [6], a dynamical framework based on a modified Lagrangian of the Standard Model with additional couplings.

Within the RMS framework, possible effects of Lorentz Invariance violation are a modified time dilation factor, a dependence of the speed of light on the velocity of the source or the observer, and an anisotropy of the speed of light. Three experiments are sufficient to validate Lorentz Invariance within this model: experiments of the Ives-Stilwell type [7], the Kennedy-Thorndike type [8] and the Michelson-Morley type [9].

The SME, in contrast, allows in principle hundreds of new effects, and for an interpretation of a single experiment one often has to restrict oneself to certain sectors of the theory. For the so-called minimal QED sector of the theory possible effects are, e.g., birefringence of the vacuum, a dispersive vacuum and an anisotropy of the speed of light.

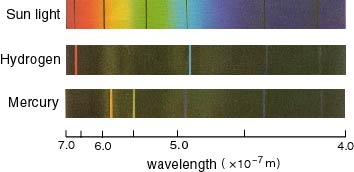

Upper limits on a possible birefringence of the vacuum can be derived from astrophysical observations of the light of very distant galaxies, and limits on a possible dispersive character of the vacuum from the light emitted during supernova explosions [e.g. 10,11,12]. These are extremely sensitive tests. However, one has to rely on the light made available by sources in the universe and to make certain assumptions about those sources.

Laboratory experiments, on the other hand, allow for very good control of the experimental conditions. Examples include measurements of the anomalous g-factor of the electron [13] and measurements of the time dilation factor using fast beams of ⁷Li⁺ ions [14]. Even data from the Global Positioning System (GPS) can be used to test Lorentz Invariance [15].

Recently, at the Universität Düsseldorf, we have performed a test of the isotropy of the speed of light with a Michelson-Morley type experiment [16]. The main component of the setup is a block of ultra-low-expansion glass (ULE), in which two high-finesse optical resonators (F = 180 000) are embedded at an angle of 90° to each other (see fig. 2). The resonance frequencies ν₁, ν₂ of the resonators are a function of the speed of light, c, and the length Lᵢ of the respective resonator: νᵢ = nᵢc/(2Lᵢ), where nᵢ is the mode number of the light mode oscillating in the resonator. Thus, if the length of each resonator is kept stable, one can derive limits on a possible anisotropy of the speed of light, c = c(θ), by measuring the resonance frequencies as a function of the orientation of the resonators. In our setup we measure the difference frequency (ν₁ − ν₂) between the two resonators by exciting their modes with laser waves. Compared to an arrangement in which a single cavity is compared with, say, an atomic reference, the hypothetical signal due to an anisotropy is doubled in size.
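The doubling of the signal can be checked with a few lines of code. The following is only an illustrative sketch, not the analysis used in the experiment: it assumes a hypothetical anisotropy model c(θ) = c₀(1 + ε cos 2θ), a perfectly stable resonator length (so that the fractional frequency shift equals the fractional change of c), and function names chosen purely for illustration.

```python
import math

C0 = 299_792_458.0  # vacuum speed of light in m/s


def resonance_frequency(mode_number, length_m, c=C0):
    """nu = n * c / (2 L) for a two-mirror resonator of length L."""
    return mode_number * c / (2.0 * length_m)


def fractional_shift(theta, epsilon):
    """Fractional shift delta_nu/nu of one resonator at orientation theta.

    Assumption (hypothetical model): c(theta) = c0 * (1 + epsilon*cos(2*theta))
    with a stable length, so that delta_nu/nu = delta_c/c.
    """
    return epsilon * math.cos(2.0 * theta)


def difference_signal(theta, epsilon):
    """Fractional shift of nu1 - nu2 for two perpendicular resonators.

    The second resonator probes the speed of light at theta + 90 degrees.
    """
    return fractional_shift(theta, epsilon) - fractional_shift(
        theta + math.pi / 2.0, epsilon
    )


if __name__ == "__main__":
    eps = 1e-17  # illustrative anisotropy amplitude, not a measured value
    for deg in (0, 45, 90):
        th = math.radians(deg)
        print(deg, fractional_shift(th, eps), difference_signal(th, eps))
```

Because the second resonator is rotated by 90°, its cos 2θ term has the opposite sign, so the difference signal has twice the amplitude of the single-resonator signal.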

Fig. 2: The ULE block containing the resonators (left). For the measurement the block is actively rotated and the frequency difference ν₁ − ν₂ is measured as a function of the orientation (right).

A cross section of the complete experimental apparatus is shown in figure 3. Several subsystems have been implemented to suppress systematic effects. For example, the ULE block containing the resonators is mounted in a temperature-stabilized vacuum chamber to ensure high length stability. The chamber is placed on breadboard I and is surrounded by a foam-padded wooden box for acoustic and thermal isolation. The laser and all the optics needed to interrogate the resonators, also on breadboard I, are shielded by another foam-padded box. To isolate the optical setup from vibrations, breadboard I rests on two active vibration isolation supports (AVI), which suppress mechanical noise coming from the rotation table and the floor.

Fig.3 Cross section of the experimental setup. A: air bearings, B: piezo motors, C: voice coil actuators, D: tilt sensors, E: air springs system, AVI's: active vibration isolation supports

The AVI also allows active control of the tilt of breadboard I by means of voice coil actuators (C). This is necessary since a varying tilt leads to varying elastic deformations of the resonator block and thus systematically shifts the resonance frequencies. The tilt is measured using electronic bubble-level sensors (D) with a resolution of 1 µrad. The described system and a rack carrying all the electronics used for the frequency stabilisation and other servo systems stand on breadboard II, which is fixed to the rotor of a high-precision air-bearing (A) rotation table. This table is used to actively change the orientation of the resonators at a rotation rate of ω_rot = 2π/(90 s). To minimize systematic effects due to tilt modulations, the rotation axis can be aligned with the direction of local gravity using an air spring system (E). A tower around the complete setup, with multilayered elements on the sides and ceiling plates containing thermo-electric coolers, provides thermal and acoustic isolation from the surroundings.

With this apparatus we have performed measurements over a period of more than one year, comprising 46 datasets that are each longer than one day. From these datasets we have used 135 000 single rotations to extract upper limits for the parameters of the RMS and the SME that describe a potential anisotropy of the speed of light.
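For a sense of scale, the number of rotations can be converted into integration time. The short sketch below assumes that every rotation takes the nominal 90 s given by the rotation rate of the table; the constant and function names are illustrative.

```python
ROTATION_PERIOD_S = 90.0  # nominal period of one full rotation of the table
SECONDS_PER_DAY = 86_400.0


def rotation_time_days(n_rotations, period_s=ROTATION_PERIOD_S):
    """Total time spent rotating, in days, assuming a constant rotation period."""
    return n_rotations * period_s / SECONDS_PER_DAY


def signal_period_s(period_s=ROTATION_PERIOD_S):
    """Period of a signal at twice the rotation frequency."""
    return period_s / 2.0


if __name__ == "__main__":
    print(rotation_time_days(135_000))  # days of rotation data
    print(signal_period_s())            # seconds per signal cycle
```

Under this assumption the 135 000 rotations correspond to about 141 days of rotation data, and a signal at twice the rotation frequency repeats every 45 s.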

Both test theories, the RMS and the SME, predict variations of the frequency difference Δν = ν₁ − ν₂ on several timescales. Due to the symmetry of the resonator system and the active rotation θ(t) = ω_rot·t, the models predict a variation of Δν at the frequency 2ω_rot: Δν(θ) = 2Bν₀ sin(2θ(t)) + 2Cν₀ cos(2θ(t)), where ν₀ is the mean optical frequency (281 THz). Thus, for every single rotation we determine the modulation amplitudes 2Bν₀ and 2Cν₀ at this frequency (see fig. 4). Due to the rotation of the Earth and its revolution around the Sun, these amplitudes will show, if Lorentz Invariance is violated, variations on the timescale of half a sidereal day, a sidereal day, and a year. The size of these modulations is directly connected to the parameters of the two test theories, which can be derived from the modulation amplitudes via fits.
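The extraction of the two modulation amplitudes from one rotation can be sketched as follows. This is a simplified illustration and not the authors' fitting procedure: it assumes noise-free samples taken at equal angle steps over exactly one full rotation, in which case a least-squares fit of an offset plus sin 2θ and cos 2θ terms reduces to two projections. Real data contain drift and noise and would need a fuller model. The function name is hypothetical.

```python
import math


def fit_single_rotation(samples_hz):
    """Return (amp_sin, amp_cos) in Hz, i.e. estimates of 2*B*nu0 and 2*C*nu0.

    Assumes samples_hz[k] was taken at theta_k = 2*pi*k/N over one full
    rotation. For N >= 5 the functions 1, sin(2*theta) and cos(2*theta) are
    orthogonal on this grid, so a constant offset drops out of the result.
    """
    n = len(samples_hz)
    if n < 5:
        raise ValueError("need at least 5 equally spaced samples per rotation")
    amp_sin = 0.0
    amp_cos = 0.0
    for k, y in enumerate(samples_hz):
        theta = 2.0 * math.pi * k / n
        amp_sin += y * math.sin(2.0 * theta)
        amp_cos += y * math.cos(2.0 * theta)
    return 2.0 * amp_sin / n, 2.0 * amp_cos / n


if __name__ == "__main__":
    # Synthetic rotation: arbitrary offset plus illustrative amplitudes.
    n = 90
    data = [
        5.0
        + 0.010 * math.sin(2.0 * (2.0 * math.pi * k / n))
        + 0.001 * math.cos(2.0 * (2.0 * math.pi * k / n))
        for k in range(n)
    ]
    print(fit_single_rotation(data))
```

Repeating such a fit for every rotation gives a time series of amplitudes, which can then be searched for the sidereal and annual variations described above.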

Fig. 4: Histograms of the determined modulation amplitudes due to active rotation. The mean values are (10 ± 1) mHz for 2Bν₀ and (1 ± 1) mHz for 2Cν₀, corresponding to (3.5 ± 0.4)×10⁻¹⁷ for 2B and (0.4 ± 0.4)×10⁻¹⁷ for 2C.
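The conversion from an amplitude in mHz to a relative amplitude is a division by the mean optical frequency of 281 THz. A minimal sketch, with an illustrative function name:

```python
NU0_HZ = 281e12  # mean optical frequency quoted in the text (281 THz)


def fractional_amplitude(amplitude_hz, nu0_hz=NU0_HZ):
    """Convert a modulation amplitude in Hz into a fraction of the optical frequency."""
    return amplitude_hz / nu0_hz


if __name__ == "__main__":
    print(fractional_amplitude(10e-3))  # mean of 2*B*nu0 (10 mHz)
    print(fractional_amplitude(1e-3))   # mean of 2*C*nu0, and the 1 mHz uncertainty
```

An amplitude of 10 mHz comes out at roughly 3.6×10⁻¹⁷ and 1 mHz at roughly 0.4×10⁻¹⁷, in line with the values quoted in the caption.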

Within the RMS theory a single parameter combination, (δ − β − 1/2), describes the anisotropy. From our measurements we deduce a value of (−1.6 ± 6.1)×10⁻¹², which yields an upper 1σ limit of 7.7×10⁻¹². This means that the anisotropy of the speed of light, defined as (1/2)·Δc(π/2)/c, is probably less than 6×10⁻¹⁸ in relative terms.
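The step from the RMS parameter to the relative anisotropy can be reproduced numerically. The sketch below rests on two assumptions that are not spelled out in this article: that the upper limit is formed as |mean| + σ, and that the RMS anisotropy scales as Δc(π/2)/c = |parameter|·(v/c)², with v ≈ 369 km/s, the velocity of the solar system relative to the cosmic microwave background, a conventional choice of preferred frame in RMS analyses. Function names are illustrative.

```python
C0 = 299_792_458.0       # vacuum speed of light in m/s
V_PREFERRED_MS = 369e3   # ASSUMED velocity relative to the CMB frame, in m/s


def one_sigma_upper_limit(mean, sigma):
    """Upper limit formed as |mean| + sigma (assumed convention)."""
    return abs(mean) + sigma


def half_anisotropy(rms_parameter, v_ms=V_PREFERRED_MS):
    """(1/2) * delta_c(pi/2) / c, assuming delta_c/c = |parameter| * (v/c)**2."""
    return 0.5 * abs(rms_parameter) * (v_ms / C0) ** 2


if __name__ == "__main__":
    limit = one_sigma_upper_limit(-1.6e-12, 6.1e-12)
    print(limit)                   # upper limit on the RMS parameter
    print(half_anisotropy(limit))  # corresponding relative anisotropy
```

With these assumptions the limit on the parameter is 7.7×10⁻¹² and the relative anisotropy comes out slightly below 6×10⁻¹⁸, consistent with the numbers given above.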

Within the SME test theory, 8 different parameters can be derived from our measurements. For most of these coefficients we can place upper bounds at the level of a few parts in 10¹⁷. The exception is one coefficient, (κ̃_e−)^ZZ, which is determined from the mean values of the modulation amplitudes 2B and 2C and is most seriously affected by systematic effects; for this coefficient we can only limit the value to below 1.3×10⁻¹⁶ (1σ). For all the coefficients our experiment improved the upper limits on a possible Lorentz Invariance violation by more than one order of magnitude compared to previous work [17-22], and no significant signature of an anisotropy of the speed of light is seen at the current sensitivity of the apparatus.

Currently, the institute is working on an improved version of the apparatus. The goal is a further significant improvement of the upper limits within the next few years.

References:

[1] "Zur Elektrodynamik bewegter Körper", A. Einstein, Annalen der Physik, 17:891 (1905).

[2] "Postulate versus Observation in the Special Theory of Relativity", H.P. Robertson, Rev. Mod. Phys., 21(3):378-382 (1949).

[3] "A test theory of special relativity: I. Simultaneity and clock synchronization", Reza Mansouri and Roman U. Sexl, Gen. Rel. Grav., 8(7):497 (1977).

[4] "A test theory of special relativity: II. First order tests", Reza Mansouri and Roman U. Sexl, Gen. Rel. Grav., 8(7):515 (1977).

[5] "A test theory of special relativity: III. Second-order tests", Reza Mansouri and Roman U. Sexl, Gen. Rel. Grav., 8(10):809 (1977).

[6] "Lorentz-violating extension of the standard model", D. Colladay and V. Alan Kostelecký, Phys. Rev. D, 58(11):116002 (1998).

[7] "An Experimental Study of the Rate of a Moving Atomic Clock. II", Herbert E. Ives and G.R. Stilwell, J. Opt. Soc. Am., 31:369 (1941).

[8] "Experimental Establishment of the Relativity of Time", Roy J. Kennedy and Edward M. Thorndike, Phys. Rev., 42:400 (1932).

[9] A.A. Michelson and E.W. Morley, American Journal of Science, III-34(203) (1887).

[10] "Limits on the Chirality of Interstellar and Intergalactic Space", M. Goldhaber and V. Trimble, J. Astrophys. Astr., 17:17 (1996).

[11] "Is There Evidence for Cosmic Anisotropy in the Polarization of Distant Radio Sources?", S.M. Carroll and G.B. Field, Phys. Rev. Lett., 79(13):2394-2397 (1997).

[12] "A limit on the variation of the speed of light arising from quantum gravity effects", J. Granot, S. Guiriec, M. Ohno, V. Pelassa et al., Nature, doi:10.1038/nature08574 (2009).

[13] "A dynamical test of special relativity using the anomalous electron g-factor", M. Kohandel, R. Golestanian, M. R. H. Khajehpour, Physics Letters A, 231(5-6) (1997).

[14] "Test of relativistic time dilation with fast optical atomic clocks at different velocities", Sascha Reinhardt, Guido Saathoff, Henrik Buhr, Lars A. Carlson, Andreas Wolf, Dirk Schwalm, Sergei Karpuk, Christian Novotny, Gerhard Huber, Marcus Zimmermann, Ronald Holzwarth, Thomas Udem, Theodor W. Hänsch, Gerald Gwinner, Nature Physics, 3:861-864 (2007).

[15] "Satellite test of special relativity using the global positioning system", P. Wolf and G. Petit, Phys. Rev. A, 56(6):4405-4409 (1997).

[16] "Laboratory Test of the Isotropy of Light Propagation at the 10⁻¹⁷ Level", Ch. Eisele, A. Yu. Nevsky and S. Schiller, Phys. Rev. Lett., 103:090401 (2009).

[17] "Tests of Relativity Using a Cryogenic Optical Resonator", C. Braxmaier, H. Müller, O. Pradl, J. Mlynek, A. Peters, S. Schiller, Phys. Rev. Lett., 88(1):010401 (2001).

[18] "Modern Michelson-Morley Experiment using Cryogenic Optical Resonators", Holger Müller, Sven Herrmann, Claus Braxmaier, Stephan Schiller and Achim Peters, Phys. Rev. Lett., 91:020401 (2003).

[19] "Test of the Isotropy of the Speed of Light Using a Continuously Rotating Optical Resonator", Sven Herrmann, Alexander Senger, Evgeny Kovalchuk, Holger Müller and Achim Peters, Phys. Rev. Lett., 95(15):150401 (2005).

[20] "Test of constancy of speed of light with rotating cryogenic optical resonators", P. Antonini, M. Okhapkin, E. Göklü and S. Schiller, Phys. Rev. A, 71:050101 (2005).

[21] "Improved test of Lorentz invariance in electrodynamics using rotating cryogenic sapphire oscillators", Paul L. Stanwix, Michael E. Tobar, Peter Wolf, Clayton R. Locke, and Eugene N. Ivanov, Phys. Rev. D, 74(8):081101 (2006).

[22] "Tests of Relativity by Complementary Rotating Michelson-Morley Experiments", Holger Müller, Paul Louis Stanwix, Michael Edmund Tobar, Eugene Ivanov, Peter Wolf, Sven Herrmann, Alexander Senger, Evgeny Kovalchuk, and Achim Peters, Phys. Rev. Lett., 99(5):050401 (2007).
